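The previous crawler example covered connecting to a database. This article does the same through an asynchronous networking framework, using the adbapi module in twisted.enterprise. adbapi.ConnectionPool creates a database connection pool; each connection works in its own thread and still relies on a library such as pymysql to talk to the database. The pool's runInteraction method calls insert_db asynchronously: insert_db receives a transaction object as its first argument, and the item passed to runInteraction arrives as its second. Once insert_db returns, the pool commits the transaction automatically.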
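To make that calling convention concrete, here is a minimal stand-in built only on the standard library. It is not Twisted's implementation: `run_interaction`, the sqlite3 database file and the one-column `bids` table are all assumptions made for illustration. The sketch only mirrors the behavior described above, where the function runs on a worker thread, receives a cursor-like object plus the extra arguments, and is followed by a commit on success or a rollback if it raises.

```python
import os
import sqlite3
import tempfile
from concurrent.futures import ThreadPoolExecutor


def run_interaction(executor, db_path, interaction, *args):
    """Hypothetical helper: run interaction(cursor, *args) on a worker thread."""
    def job():
        conn = sqlite3.connect(db_path)  # each job opens its own connection
        try:
            result = interaction(conn.cursor(), *args)
            conn.commit()                # commit only if the function returned
            return result
        except Exception:
            conn.rollback()              # undo partial work, then re-raise
            raise
        finally:
            conn.close()
    return executor.submit(job)          # a Future, standing in for a Deferred


def insert_db(tx, item):
    # tx is the cursor; item is whatever was passed to run_interaction
    tx.execute("INSERT INTO bids(title) VALUES (?)", (item["title"],))


def demo():
    with tempfile.TemporaryDirectory() as tmp:
        db_path = os.path.join(tmp, "demo.db")
        conn = sqlite3.connect(db_path)
        conn.execute("CREATE TABLE bids(title TEXT NOT NULL)")
        conn.commit()
        conn.close()

        with ThreadPoolExecutor(max_workers=2) as pool:
            ok = run_interaction(pool, db_path, insert_db, {"title": "Road repair"})
            bad = run_interaction(pool, db_path, insert_db, {"title": None})
            ok.result()                          # wait for the good insert
            failed = bad.exception() is not None  # NOT NULL violation

        conn = sqlite3.connect(db_path)
        rows = conn.execute("SELECT title FROM bids").fetchall()
        conn.close()
        return rows, failed
```

Calling `demo()` inserts one valid row and attempts one invalid row; only the valid row should be stored, and the failure surfaces on the returned future rather than in the calling thread.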

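The site crawled in this article is http://www.ci.commerce.ca.us/bids.aspx . Start by creating a new Scrapy project (any name will do; the code here uses CSDN2), then look at items.py: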

    from scrapy import Item, Field


    class Csdn2Item(Item):
        title = Field()
        dueDate = Field()
        web_url = Field()
        bid_url = Field()    # link to the bid document
        issuedate = Field()
        category = Field()   # bid category

Create the spider file Commerce.py in the spiders folder:

    import datetime

    import scrapy
    from scrapy.http import Request

    from CSDN2.items import Csdn2Item


    class CommerceSpider(scrapy.Spider):
        name = 'commerce'
        start_urls = ['http://www.ci.commerce.ca.us/bids.aspx']
        domain = 'http://www.ci.commerce.ca.us/'

        def parse(self, response):
            # Locate the rows of the bid table with XPath
            result_list = response.xpath("//*[@id='BidsLeftMargin']/..//div[5]//tr")
            for result in result_list:
                item = Csdn2Item()
                title1 = result.xpath("./td[2]/span[1]/a/text()").extract_first()
                # Rows without a title link are not bids, so skip them
                if title1:
                    item["title"] = title1
                    item["web_url"] = self.start_urls[0]
                    urls = self.domain + result.xpath("./td[2]/span[1]/a/@href").extract_first()
                    item['bid_url'] = urls
                    # Follow the detail page and carry the partial item along
                    yield Request(urls, callback=self.parse_content, meta={'item': item})

        def parse_content(self, response):
            item = response.meta['item']
            category = response.xpath("//span[@class='BidDetailSpec']//text()").extract_first()
            item['category'] = category
            issuedate = response.xpath("//span[(text()='Publication Date/Time:')]/following::span[1]/text()").extract_first()
            item['issuedate'] = issuedate
            expireDate = response.xpath("//span[(text()='Closing Date/Time:')]/following::span[1]/text()").extract_first()
            # If the closing date reads "Open Until Contracted", default to tomorrow.
            # The extra truthiness check avoids a TypeError when the field is missing.
            if expireDate and 'Open Until Contracted' in expireDate:
                expiredate1 = (datetime.datetime.now() + datetime.timedelta(days=1)).strftime('%m/%d/%Y')
            else:
                expiredate1 = expireDate
            item['dueDate'] = expiredate1
            yield item
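The closing-date rule in parse_content can be isolated into a small function, which makes it easy to check without running a crawl. This is only a sketch: `normalize_due_date` and its `today` parameter are hypothetical names that do not appear in the spider, and a missing date is assumed to pass through as `None`.

```python
import datetime


def normalize_due_date(raw, today=None):
    """Hypothetical helper: map 'Open Until Contracted' to tomorrow's date."""
    today = today or datetime.date.today()
    if raw and 'Open Until Contracted' in raw:
        # Same fallback as the spider: one day ahead, formatted MM/DD/YYYY
        return (today + datetime.timedelta(days=1)).strftime('%m/%d/%Y')
    # Any other value (including None) is returned unchanged
    return raw
```

Passing `today` explicitly keeps the function deterministic, for example `normalize_due_date('Open Until Contracted', datetime.date(2020, 1, 31))`.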

Next comes pipelines.py:

    # -*- coding: utf-8 -*-
    from twisted.enterprise import adbapi  # remember to install the Twisted package


    class Csdn2Pipeline(object):
        def open_spider(self, spider):
            # Read the project settings from the spider; this replaces the
            # deprecated "from scrapy.conf import settings" import.
            settings = spider.settings
            db = settings['MYSQL_DB_NAME']
            host = settings['MYSQL_HOST']
            port = settings['MYSQL_PORT']
            user = settings['MYSQL_USER']
            passwd = settings['MYSQL_PASSWORD']
            # Create the connection pool; pymysql performs the actual database access
            self.dbpool = adbapi.ConnectionPool('pymysql', host=host, port=port, db=db,
                                                user=user, passwd=passwd, charset='utf8')

        def process_item(self, item, spider):
            # Schedule insert_db on a pool thread; item becomes its second argument
            self.dbpool.runInteraction(self.insert_db, item)
            return item

        def insert_db(self, tx, item):
            values = (
                item['title'],
                item['dueDate'],
                item['web_url'],
                item['bid_url'],
                item['issuedate'],
                item['category'],
            )
            # Errors are caught and printed here, so they never reach the
            # Deferred returned by runInteraction.
            try:
                sql = 'INSERT INTO bids(title,dueDate,web_url,bid_url,issuedate,category) VALUES (%s,%s,%s,%s,%s,%s)'
                tx.execute(sql, values)
                print("Row inserted")
            except Exception as e:
                print('Insert failed:', e)

        def close_spider(self, spider):
            self.dbpool.close()

In settings.py:

    # Obey robots.txt rules
    ROBOTSTXT_OBEY = False

    ITEM_PIPELINES = {
        'CSDN2.pipelines.Csdn2Pipeline': 300,
    }

    # MySQL connection settings
    MYSQL_HOST = 'localhost'
    MYSQL_DB_NAME = 'db_name'
    MYSQL_USER = 'root'
    MYSQL_PASSWORD = '123456'
    MYSQL_PORT = 3306

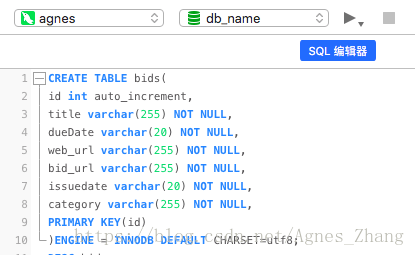

Before crawling, create the table in Navicat:

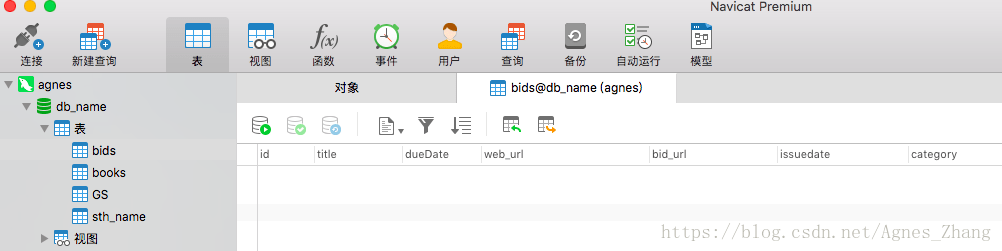
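For readers who would rather not use a GUI, the table could also be created from a script. The sketch below is an assumption-heavy illustration: the column names are taken from the INSERT statement in pipelines.py, but the `id` column, every column type and length, and the `create_bids_table` helper are guesses, not the schema shown in the screenshot. The connection defaults simply repeat the values from settings.py.

```python
# Hypothetical DDL: column names come from the pipeline's INSERT statement;
# the id column and all types/lengths are assumptions.
CREATE_BIDS_TABLE = """
CREATE TABLE IF NOT EXISTS bids (
    id INT AUTO_INCREMENT PRIMARY KEY,
    title VARCHAR(255),
    dueDate VARCHAR(64),
    web_url VARCHAR(255),
    bid_url VARCHAR(255),
    issuedate VARCHAR(64),
    category VARCHAR(255)
) DEFAULT CHARSET = utf8
"""


def create_bids_table(host='localhost', port=3306, user='root',
                      password='123456', database='db_name'):
    """Hypothetical helper: run the DDL above against a MySQL server."""
    import pymysql  # imported lazily so the DDL can be inspected without the driver

    conn = pymysql.connect(host=host, port=port, user=user,
                           password=password, database=database, charset='utf8')
    try:
        with conn.cursor() as cursor:
            cursor.execute(CREATE_BIDS_TABLE)
        conn.commit()
    finally:
        conn.close()
```

The dates are stored as strings here because the spider yields them as scraped text; a DATE column would need the values parsed first.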

Once created, it looks like this:

Then run the spider from the terminal:

scrapy crawl commerce

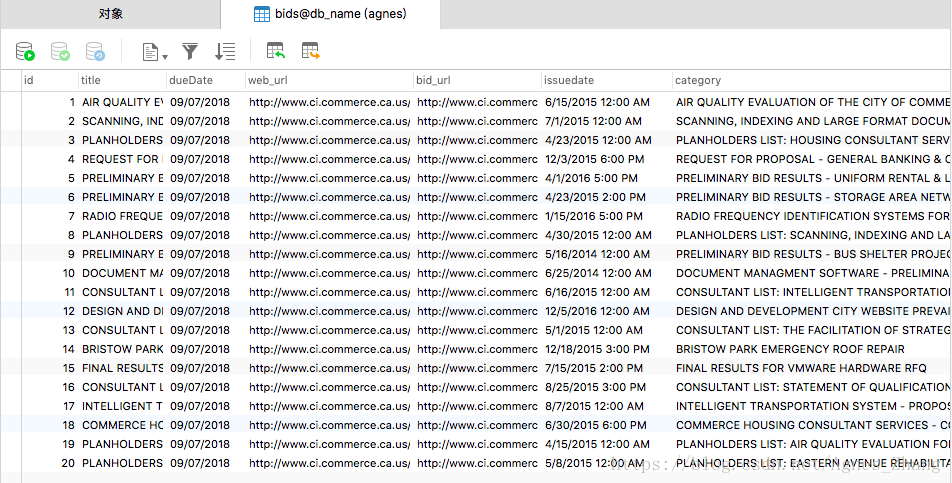

The results look like this:
