Connecting a PyCharm Crawler to a SQL Server Database

Continuing from the previous post, the site being crawled is still https://xxgk.eic.sh.cn/jsp/view/eiaReportList.jsp. In the spider script test.py, define the data to be scraped:

```python
import re

import scrapy

from Agnes_test1.items import AgnesTest1Item


def clean(text):
    # Strip non-breaking spaces (\xa0); a missing cell (None) becomes "" instead of
    # making re.sub raise a TypeError
    return re.sub("\xa0", "", text) if text else ""


class KeywordSpider(scrapy.Spider):
    name = 'test'
    start_urls = ['https://xxgk.eic.sh.cn/jsp/view/eiaReportList.jsp']

    def parse(self, response):
        # Every <tr> of the table that follows the element with id="menu"
        result_list = response.xpath("//*[@id='menu']/following-sibling::table/tr")
        for result in result_list:
            title = result.xpath("./td[2]/text()").extract_first()
            department = result.xpath("./td[4]/text()").extract_first()
            address = result.xpath("./td[6]/text()").extract_first()
            project_type = result.xpath("./td[7]/text()").extract_first()  # avoids shadowing built-in type
            start_date = result.xpath("./td[8]/text()").extract_first()
            end_date = result.xpath("./td[9]/text()").extract_first()
            # Only rows that have a title are treated as data rows
            if title:
                item = AgnesTest1Item()
                item['title'] = clean(title)
                item['department'] = clean(department)
                item['address'] = clean(address)
                item['type'] = clean(project_type)
                item['startDate'] = clean(start_date)
                item['endDate'] = clean(end_date)
                yield item
```
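The row handling in the spider can be checked without Scrapy or network access. Below is a plain-Python sketch of the same logic: XPath's `td[n]` is 1-based, so it corresponds to index `n - 1` of a Python list of cell texts. `clean_cell`, `row_to_item`, `FIELD_COLUMNS` and the sample row are hypothetical illustrations, not part of the project, and the sample values are made up.

```python
# Column positions in the listing table, using XPath's 1-based td indexes
# (mirrors the spider's ./td[2], ./td[4], ... expressions).
FIELD_COLUMNS = {
    "title": 2,
    "department": 4,
    "address": 6,
    "type": 7,
    "startDate": 8,
    "endDate": 9,
}


def clean_cell(text):
    """Remove non-breaking spaces; treat a missing cell (None) as an empty string."""
    return text.replace("\xa0", "") if text else ""


def row_to_item(cells):
    """Map one row's cell texts (0-based list) to an item dict.

    Returns None when the title cell is empty, like the spider's `if title:` check.
    """
    def cell(xpath_index):
        i = xpath_index - 1  # XPath td[n] is cells[n - 1]
        return cells[i] if i < len(cells) else None

    if not cell(FIELD_COLUMNS["title"]):
        return None
    return {field: clean_cell(cell(idx)) for field, idx in FIELD_COLUMNS.items()}


# Hypothetical row: the values are invented, only the column layout matters.
row = ["1", "\xa0Sample project\xa0", "x", "Sample dept", "x",
       "Pudong", "report", "2020-01-01", "2020-01-10"]
print(row_to_item(row))
```

Cells beyond the end of a short row are treated as missing rather than raising `IndexError`, which is the same tolerance the `None` check gives the real spider.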

Next comes pipelines.py, where the scraped items are written to the SQL Server database:

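```python
import pymssql


class AgnesTest1Pipeline:
    def open_spider(self, spider):
        # Write the scraped data directly into SQL Server.
        # SQL Server's default TCP port is 1433; 1434 is what this setup uses,
        # so use whatever port your instance listens on.
        self.conn = pymssql.connect(host='localhost', port='1434', user='sa',
                                    password='123', database='agnes', charset='utf8')
        self.cursor = self.conn.cursor()
        print("Connected to the database")

    def process_item(self, item, spider):
        try:
            # Parameterized query: pymssql fills in and escapes the %s placeholders
            self.cursor.execute(
                "INSERT INTO dbo.project(title,department,address,type,startDate,endDate) "
                "VALUES (%s,%s,%s,%s,%s,%s)",
                (item['title'], item['department'], item['address'],
                 item['type'], item['startDate'], item['endDate']))
            self.conn.commit()
            print("Row inserted")
        except Exception as ex:
            # Undo the failed statement so it does not affect later inserts
            self.conn.rollback()
            print(ex)
        return item

    def close_spider(self, spider):
        self.conn.close()
```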
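Committing after every single row is simple but costs one round trip per item. One possible variation (not what this post uses) is to buffer items and write them with the DB-API `cursor.executemany`, which pymssql cursors provide. A sketch, assuming the same dbo.project table and connection settings; `BatchedProjectPipeline`, `batch_size` and the `connect` factory parameter are hypothetical names, the last one added only so the class can be exercised with a fake connection.

```python
COLUMNS = ("title", "department", "address", "type", "startDate", "endDate")

# INSERT INTO dbo.project (title, department, ...) VALUES (%s, %s, ...)
INSERT_SQL = "INSERT INTO dbo.project ({}) VALUES ({})".format(
    ", ".join(COLUMNS), ", ".join(["%s"] * len(COLUMNS))
)


class BatchedProjectPipeline:
    """Hypothetical variant of AgnesTest1Pipeline that inserts rows in batches."""

    batch_size = 100  # assumption: tune for your data volume

    def __init__(self, connect=None):
        # `connect` is a zero-argument factory returning a DB-API connection;
        # when omitted (as when Scrapy builds the pipeline), pymssql is used.
        self._connect = connect
        self.rows = []

    def open_spider(self, spider):
        if self._connect is None:
            import pymssql  # imported lazily so the class can be tested without it
            self.conn = pymssql.connect(host="localhost", port="1434", user="sa",
                                        password="123", database="agnes", charset="utf8")
        else:
            self.conn = self._connect()
        self.cursor = self.conn.cursor()

    def process_item(self, item, spider):
        # Missing fields become empty strings rather than raising KeyError
        self.rows.append(tuple(item.get(col, "") for col in COLUMNS))
        if len(self.rows) >= self.batch_size:
            self._flush()
        return item

    def _flush(self):
        if not self.rows:
            return
        batch, self.rows = self.rows, []
        try:
            self.cursor.executemany(INSERT_SQL, batch)
            self.conn.commit()
        except Exception as ex:
            # Roll back the whole batch; its rows are dropped and the error is printed
            self.conn.rollback()
            print(ex)

    def close_spider(self, spider):
        self._flush()  # write whatever remains in the last partial batch
        self.conn.close()
```

To try it, the class would go in pipelines.py and be registered in `ITEM_PIPELINES` instead of `AgnesTest1Pipeline`. The trade-off is fewer commits and round trips in exchange for coarser error handling: one bad row rolls back its entire batch.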

Finally, settings.py:

```python
ITEM_PIPELINES = {
    'Agnes_test1.pipelines.AgnesTest1Pipeline': 100,
}
```
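The value 100 is the pipeline's order: Scrapy passes each item through the enabled pipelines from the lowest value to the highest, customarily within 0-1000. The snippet below only imitates that ordering with `sorted()` to make it concrete; Scrapy does the actual ordering internally, and `CleanTextPipeline` is a hypothetical second pipeline added purely for illustration.

```python
# Same structure as settings.py; CleanTextPipeline is hypothetical
ITEM_PIPELINES = {
    'Agnes_test1.pipelines.AgnesTest1Pipeline': 100,
    'Agnes_test1.pipelines.CleanTextPipeline': 50,
}

# Lower values run first, so items would be cleaned before being written to SQL Server
execution_order = sorted(ITEM_PIPELINES, key=ITEM_PIPELINES.get)
for path in execution_order:
    print(path)
```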

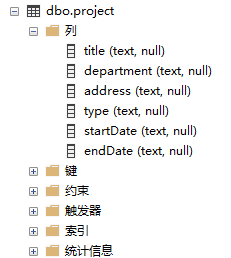

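Don't run it yet. First open SQL Server Management Studio and create a new database (mine is named agnes). Then create a table named project in it and add the columns title, department, address, type, startDate and endDate, matching the INSERT statement in the pipeline. The column list on the left then looks like this (I picked the column data types at random, so no complaints please):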
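The table here was created through the SSMS designer. If you prefer a script, the sketch below generates an equivalent CREATE TABLE statement that could be pasted into a New Query window. The column types are assumptions, not the ones in the screenshot: NVARCHAR stores text as Unicode, so Chinese titles and addresses survive regardless of the database's default collation, and the dates are kept as text for simplicity because the spider yields strings.

```python
# Assumed column types; adjust lengths to your data
PROJECT_COLUMNS = {
    "title": "NVARCHAR(255)",
    "department": "NVARCHAR(255)",
    "address": "NVARCHAR(255)",
    "type": "NVARCHAR(50)",
    "startDate": "NVARCHAR(20)",
    "endDate": "NVARCHAR(20)",
}


def create_table_sql(table="dbo.project", columns=PROJECT_COLUMNS):
    """Build a T-SQL CREATE TABLE statement with bracket-quoted column names."""
    column_lines = ",\n".join(
        f"    [{name}] {sql_type}" for name, sql_type in columns.items()
    )
    return f"CREATE TABLE {table} (\n{column_lines}\n);"


print(create_table_sql())
```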

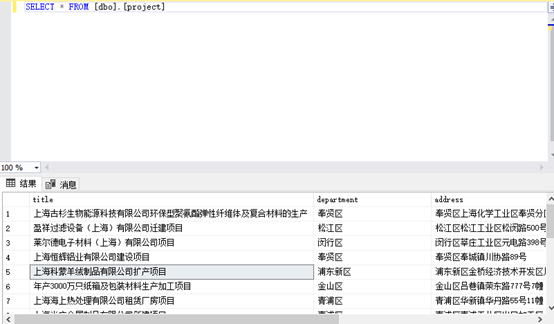

Finally, go back to PyCharm, run `scrapy crawl test` in the Terminal, then query the table in the database to confirm that the data was imported successfully.
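Besides checking in SSMS, the import can also be verified from Python. A minimal sketch, assuming any DB-API connection (for example one returned by `pymssql.connect` with the pipeline's settings); `summarize_table` is a hypothetical helper, and the demo uses an in-memory sqlite3 table with invented rows only so the snippet runs without a SQL Server instance.

```python
import sqlite3  # used only for the self-contained demo below


def summarize_table(conn, table="dbo.project", sample_size=5):
    """Return (row count, up to `sample_size` sample rows) from `table`.

    `conn` can be any DB-API connection. The table name is interpolated
    directly into the SQL, so only pass trusted names.
    """
    cursor = conn.cursor()
    cursor.execute(f"SELECT COUNT(*) FROM {table}")
    (count,) = cursor.fetchone()
    cursor.execute(f"SELECT title, startDate, endDate FROM {table}")
    sample = cursor.fetchmany(sample_size)
    cursor.close()
    return count, sample


# Demo against an in-memory SQLite table with made-up rows
demo = sqlite3.connect(":memory:")
demo.execute("CREATE TABLE project (title TEXT, startDate TEXT, endDate TEXT)")
demo.executemany(
    "INSERT INTO project VALUES (?, ?, ?)",
    [("Sample A", "2020-01-01", "2020-01-10"), ("Sample B", "2020-02-01", "2020-02-10")],
)
count, sample = summarize_table(demo, table="project")
print(count, sample)
```

Against SQL Server, the same function would be called with the pymssql connection and the default `dbo.project` table name.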
