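1. I won't go into detail on installing Scrapy. It requires the VS (Visual Studio) build environment; I tried many workarounds and none of them worked, so in the end it is best to just spend the few hours installing it.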

2. Create the Scrapy project from the command line

    scrapy startproject chinaDaily

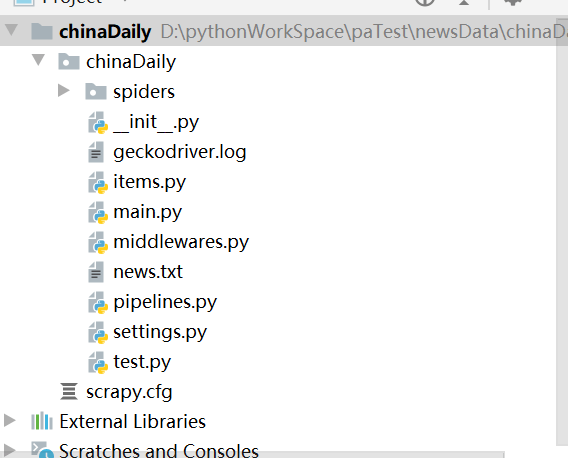

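3. Open the project in PyCharm and create a Python package; the project then contains a scrapy.cfg and an __init__.py (scrapy.cfg comes from `scrapy startproject`, and PyCharm adds __init__.py to a new package)

4. Project structure after creation

5. Modify settings.py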

    ITEM_PIPELINES = {
        'chinaDaily.pipelines.ChinadailyPipeline': 300,
    }

    BOT_NAME = 'chinaDaily'

    SPIDER_MODULES = ['chinaDaily.spiders']
    NEWSPIDER_MODULE = 'chinaDaily.spiders'

    DOWNLOADER_MIDDLEWARES = {
        # 'chinaDaily.middlewares.RandomUserAgent': 543,
        'chinaDaily.middlewares.JavaScriptMiddleware': 543,  # add this line

        # This middleware collects failed pages and reschedules them once the
        # spider has finished crawling. (Failures may be caused by temporary
        # problems such as a connection timeout or an HTTP 500 error.)
        'scrapy.downloadermiddlewares.retry.RetryMiddleware': 80,
        # This middleware adds HTTP proxy support for requests. Enable a proxy
        # by setting the `proxy` meta key on the Request object.
        'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 100,
    }
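The numbers in DOWNLOADER_MIDDLEWARES are priorities: Scrapy calls each middleware's process_request in increasing order of these values, and process_response in decreasing order. As a rough illustration of the order this gives the three entries above, here is a sketch in plain Python. It is not Scrapy's own loader, it ignores the built-in middlewares that Scrapy merges in from its defaults, and `request_order` is a hypothetical helper name.

```python
# Illustrative only: mimic how priority values order the configured middlewares.
DOWNLOADER_MIDDLEWARES = {
    'chinaDaily.middlewares.JavaScriptMiddleware': 543,
    'scrapy.downloadermiddlewares.retry.RetryMiddleware': 80,
    'scrapy.downloadermiddlewares.httpproxy.HttpProxyMiddleware': 100,
}


def request_order(middlewares):
    """Return class paths sorted by ascending priority.

    Entries mapped to None are skipped, since None disables a middleware.
    """
    enabled = {path: prio for path, prio in middlewares.items() if prio is not None}
    return sorted(enabled, key=enabled.get)


order = request_order(DOWNLOADER_MIDDLEWARES)
for path in order:
    print(DOWNLOADER_MIDDLEWARES[path], path)
```

With these values the retry and proxy middlewares see each request before JavaScriptMiddleware does.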

6. pipelines.py

    # Define your item pipelines here
    #
    # Don't forget to add your pipeline to the ITEM_PIPELINES setting
    # See: https://docs.scrapy.org/en/latest/topics/item-pipeline.html

    # useful for handling different item types with a single interface
    import json

    from itemadapter import ItemAdapter


    class ChinadailyPipeline:
        fp = None

        # Hook that Scrapy calls only once, when the spider starts
        def open_spider(self, spider):
            print('Spider starting...')
            self.fp = open('./news.txt', 'w', encoding='utf8')

        # process_item handles item objects:
        # the item parameter receives each item submitted by the spider file,
        # and the method is called once for every item received
        def process_item(self, item, spider):
            title = item['title']
            content = item['content']
            self.fp.write(title + ':' + content + '\n')
            return item  # this item is passed on to the next pipeline class to run

        def close_spider(self, spider):
            print('Spider finished')
            self.fp.close()
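The three hooks above run in a fixed order: open_spider once, process_item once per item, close_spider once. The following sketch drives the same sequence by hand so it can be tried without Scrapy. It makes several assumptions: `NewsFilePipeline` and `run_pipeline` are hypothetical names, items are plain dicts, the output path is passed in rather than hard-coded, and a missing title or content is written as an empty string instead of raising an error.

```python
import os
import tempfile


class NewsFilePipeline:
    """Hypothetical stand-in for ChinadailyPipeline that tolerates missing fields."""

    def __init__(self, path):
        self.path = path
        self.fp = None

    def open_spider(self, spider):
        # Open the output file once, before any item arrives.
        self.fp = open(self.path, 'w', encoding='utf8')

    def process_item(self, item, spider):
        # Fall back to '' so a None title does not break string concatenation.
        title = item.get('title') or ''
        content = item.get('content') or ''
        self.fp.write(title + ':' + content + '\n')
        return item

    def close_spider(self, spider):
        self.fp.close()


def run_pipeline(items, path):
    """Call the hooks in the order described above and return the file contents."""
    pipeline = NewsFilePipeline(path)
    pipeline.open_spider(spider=None)
    try:
        for item in items:
            pipeline.process_item(item, spider=None)
    finally:
        pipeline.close_spider(spider=None)
    with open(path, encoding='utf8') as fp:
        return fp.read()


out_path = os.path.join(tempfile.mkdtemp(), 'news.txt')
text = run_pipeline(
    [{'title': 'A', 'content': 'first'}, {'title': None, 'content': 'second'}],
    out_path,
)
print(text)
```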

7. middlewares.py

    # Define here the models for your spider middleware
    #
    # See documentation in:
    # https://docs.scrapy.org/en/latest/topics/spider-middleware.html
    import time

    from scrapy import signals

    # useful for handling different item types with a single interface
    from itemadapter import is_item, ItemAdapter
    from scrapy.http import HtmlResponse
    from selenium import webdriver
    from selenium.webdriver.common.by import By


    class ChinadailySpiderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the spider middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_spider_input(self, response, spider):
            # Called for each response that goes through the spider
            # middleware and into the spider.

            # Should return None or raise an exception.
            return None

        def process_spider_output(self, response, result, spider):
            # Called with the results returned from the Spider, after
            # it has processed the response.

            # Must return an iterable of Request, or item objects.
            for i in result:
                yield i

        def process_spider_exception(self, response, exception, spider):
            # Called when a spider or process_spider_input() method
            # (from other spider middleware) raises an exception.

            # Should return either None or an iterable of Request or item objects.
            pass

        def process_start_requests(self, start_requests, spider):
            # Called with the start requests of the spider, and works
            # similarly to the process_spider_output() method, except
            # that it doesn't have a response associated.

            # Must return only requests (not items).
            for r in start_requests:
                yield r

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)


    class ChinadailyDownloaderMiddleware:
        # Not all methods need to be defined. If a method is not defined,
        # scrapy acts as if the downloader middleware does not modify the
        # passed objects.

        @classmethod
        def from_crawler(cls, crawler):
            # This method is used by Scrapy to create your spiders.
            s = cls()
            crawler.signals.connect(s.spider_opened, signal=signals.spider_opened)
            return s

        def process_request(self, request, spider):
            # Called for each request that goes through the downloader
            # middleware.

            # Must either:
            # - return None: continue processing this request
            # - or return a Response object
            # - or return a Request object
            # - or raise IgnoreRequest: process_exception() methods of
            #   installed downloader middleware will be called
            return None

        def process_response(self, request, response, spider):
            # Called with the response returned from the downloader.

            # Must either:
            # - return a Response object
            # - return a Request object
            # - or raise IgnoreRequest
            return response

        def process_exception(self, request, exception, spider):
            # Called when a download handler or a process_request()
            # (from other downloader middleware) raises an exception.

            # Must either:
            # - return None: continue processing this exception
            # - return a Response object: stops process_exception() chain
            # - return a Request object: stops process_exception() chain
            pass

        def spider_opened(self, spider):
            spider.logger.info('Spider opened: %s' % spider.name)


    class JavaScriptMiddleware(object):
        def process_request(self, request, spider):
            # executable_path = '/home/hadoop/crawlcompanyinfo0906/phantomjs'
            print("PhantomJS is starting...")
            # driver = webdriver.PhantomJS(executable_path)  # choose the browser to use
            driver = webdriver.Chrome()
            driver.get(request.url)
            time.sleep(1)
            js = "var q=document.documentElement.scrollTop=10000"
            i = 1
            while i <= 10:
                # find_element_by_xpath was removed in Selenium 4, so use find_element(By.XPATH, ...)
                driver.find_element(By.XPATH, '//*[@id="page_bar0"]/div').click()
                i = i + 1
            driver.execute_script(js)  # run JS to mimic a user; here it scrolls the page to the bottom
            time.sleep(3)
            body = driver.page_source
            current_url = driver.current_url
            driver.quit()  # close the browser so a Chrome instance is not left open per request
            print("Visited " + request.url)
            return HtmlResponse(current_url, body=body, encoding='utf-8', request=request)

8. Create main.py

    # -*- coding: utf-8 -*-
    import os
    from scrapy import cmdline

    dirpath = os.path.dirname(os.path.abspath(__file__))  # directory containing this file
    os.chdir(dirpath)  # switch to that directory
    cmdline.execute(['scrapy', 'crawl', 'newsToday22'])

9. Create spiders/severalNewsSpider.py

    # -*- coding: utf-8 -*-
    import scrapy
    from chinaDaily.items import ChinadailyItem  # must declare the title and content fields


    class chinaSeveralNewsSpider(scrapy.Spider):
        name = 'newsToday22'  # this is the spider name that main.py runs
        allowed_domains = ['www.chinanews.com']
        start_urls = ['http://www.chinanews.com/society/']

        def parse(self, response):
            linkList = []
            title = response.xpath('//title/text()').extract_first()
            print(title)
            div_list = response.xpath('//*[@id="ent0"]/li')
            print("^" * 20)
            print(len(div_list))
            for div in div_list:
                # titleItem = div.xpath('.//div[@class="news_title"]/em/a/text()').extract_first().strip()
                # content = div.xpath('.//div[@class="news_content"]/a/text()').extract_first().strip()  # returns a string
                # print("$" * 20)
                # print(content)
                # item = ChinadailyItem()  # an item object
                # item['title'] = titleItem  # field defined in items.py
                # item['content'] = content
                # yield item  # submit the item to the pipeline
                link = div.xpath('.//div[@class="news_content"]/a/@href').extract_first()
                print(link)
                if link:  # skip list entries that have no link
                    linkList.append(link)

            # Query from response rather than the loop variable, which is undefined when the list is empty
            links = response.xpath('//*[@id="ent0"]/li//div[@class="news_content"]/a/@href').extract()
            print("$" * 20)
            print(len(links))

            for linkItem in linkList:
                # Extracted URLs may be incomplete, so resolve them against the page URL.
                # Request the next-level URL and handle it with the second parse method;
                # dont_filter=True keeps the new URL from sometimes being filtered out by the domain scope.
                yield scrapy.Request(response.urljoin(linkItem), callback=self.parse_s, dont_filter=True)

        def parse_s(self, response):
            print("*" * 20)
            # print(response.text)
            print("*" * 20)
            title = response.xpath('//title/text()').extract_first()
            print(title)
            div_list = response.xpath('//div[@class="left_zw"]/p')
            print("^" * 20)
            print(len(div_list))
            contentresult = ''
            for div in div_list:
                content = div.xpath('./text()').extract_first()  # a string, or None when the <p> has no text
                print("+++++++++++++++++++")
                print(type(content))
                if isinstance(content, str):
                    content = content.strip()
                    print(content)
                    contentresult += content
            # Package the parsed data into an item object
            item = ChinadailyItem()  # an item object
            item['title'] = title  # field defined in items.py
            item['content'] = contentresult
            yield item  # submit the item to the pipeline
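As the spider's comments note, the links pulled from the listing page may be incomplete and need to be turned into full URLs before they are requested. Rather than concatenating a fixed prefix by hand, the standard library's `urljoin` can resolve them against the page URL. A minimal sketch follows; the three sample hrefs are made up for illustration and are not taken from the real site.

```python
from urllib.parse import urljoin

# URL of the listing page the links were extracted from.
page_url = 'http://www.chinanews.com/society/'

# Hypothetical hrefs covering three common shapes:
# protocol-relative, root-relative, and already absolute.
hrefs = [
    '//www.chinanews.com/sh/example-1.shtml',
    '/sh/example-2.shtml',
    'http://www.chinanews.com/sh/example-3.shtml',
]

# urljoin fills in whatever parts (scheme, host) the href is missing.
absolute = [urljoin(page_url, href) for href in hrefs]
for url in absolute:
    print(url)
```

Inside a Scrapy callback, `response.urljoin(href)` performs the same kind of resolution against the response's URL.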
