Personal notes

    # -*- coding: utf-8 -*-
    import json
    import re
    import time
    from datetime import date, datetime, timedelta

    import scrapy
    import snowflake.client
    from scrapy.http import Request

    from fzggw.constants import *
    from fzggw.items import FgwNewsItem
    from fzggw.replace_emoji import remove_emoji
    from fzggw.save_images import save_img
    from fzggw.utils import *

    class GjcxGgwSpider(scrapy.Spider):
        name = 'gjcx_ggw'
        start_urls = ['http://sc.ndrc.gov.cn//policy/advancedQuery?']

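        def get_form_data(self, page):
            # POST form fields for one page of results; only pageNum changes.
            # FormRequest URL-encodes these values, so they must all be strings.
            return {
                'pageNum': f"{page}",
                'pageSize': '10',
                'timeStageId': '',
                'areaFlagId': '',
                'areaId': '',
                'zoneId': '',
                'businessPeopleId': '',
                'startDate': '',
                'endDate': '',
                'industryId': '',
                'unitName': '',
                'issuedno': '',
            }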
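To see what the request body looks like, here is a minimal standalone sketch that rebuilds the same form fields and URL-encodes them with the standard library, which should match the `application/x-www-form-urlencoded` body that FormRequest sends. `build_form_data` and its keyword overrides are hypothetical helpers, not part of the spider.

```python
from urllib.parse import urlencode

# Field names copied from get_form_data(); only pageNum varies between pages.
FIELDS = [
    "pageNum", "pageSize", "timeStageId", "areaFlagId", "areaId", "zoneId",
    "businessPeopleId", "startDate", "endDate", "industryId", "unitName", "issuedno",
]


def build_form_data(page, page_size=10, **overrides):
    """Hypothetical helper: return the form fields with every value as a string."""
    data = {field: "" for field in FIELDS}
    data["pageNum"] = str(page)
    data["pageSize"] = str(page_size)
    for key, value in overrides.items():
        if key not in data:
            raise KeyError(f"unknown form field: {key}")
        data[key] = str(value)  # coerce to str, as FormRequest requires
    return data


if __name__ == "__main__":
    # Prints the URL-encoded body for page 3, e.g. "pageNum=3&pageSize=10&timeStageId=&..."
    print(urlencode(build_form_data(3)))
```

Converting every value with `str()` in one place avoids passing an integer page number by accident.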

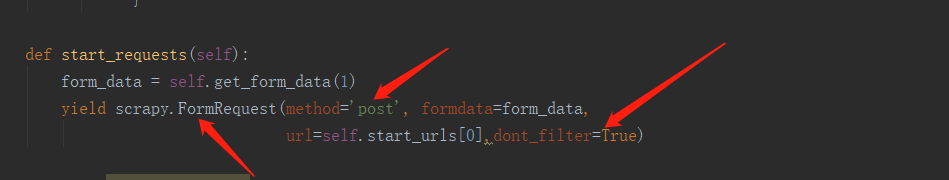

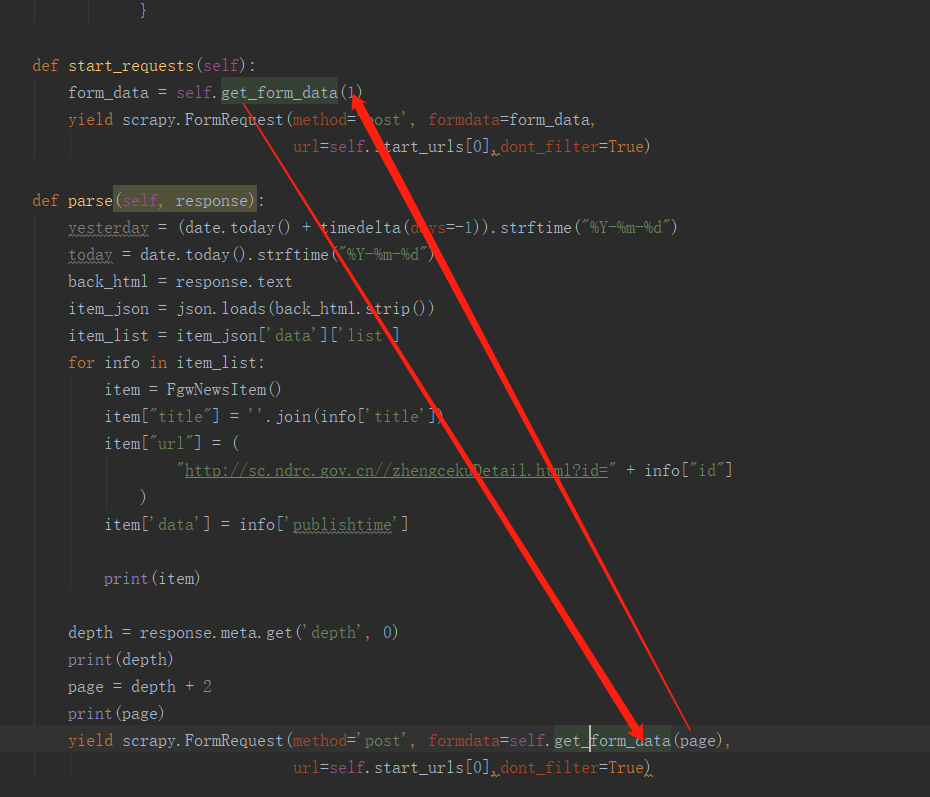

        def start_requests(self):
            form_data = self.get_form_data(1)
            yield scrapy.FormRequest(
                url=self.start_urls[0],
                formdata=form_data,
                method='POST',
                dont_filter=True,
            )

        def parse(self, response):
            # Yesterday's and today's dates (not used further in this snippet).
            yesterday = (date.today() + timedelta(days=-1)).strftime("%Y-%m-%d")
            today = date.today().strftime("%Y-%m-%d")

            # The endpoint answers with JSON, not HTML.
            item_json = json.loads(response.text.strip())
            item_list = item_json['data']['list']
            for info in item_list:
                item = FgwNewsItem()
                item["title"] = ''.join(info['title'])
                item["url"] = (
                    "http://sc.ndrc.gov.cn//zhengcekuDetail.html?id=" + str(info["id"])
                )
                item['data'] = info['publishtime']
                print(item)
                yield item  # without this, items never reach the pipelines
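The parsing step can be tried without Scrapy. This sketch assumes the response has the shape `{"data": {"list": [{"id": ..., "title": ..., "publishtime": ...}]}}`, which is what `parse()` reads; the `parse_records` name and the sample payload are made up for illustration, and it returns plain dicts instead of `FgwNewsItem`.

```python
import json

DETAIL_URL = "http://sc.ndrc.gov.cn//zhengcekuDetail.html?id="


def parse_records(text):
    """Turn the advancedQuery JSON text into a list of plain dicts."""
    payload = json.loads(text.strip())
    records = []
    for info in payload["data"]["list"]:
        records.append({
            "title": info["title"],
            "url": DETAIL_URL + str(info["id"]),  # str() in case the id is numeric
            "data": info["publishtime"],          # same field name the spider uses
        })
    return records


if __name__ == "__main__":
    # Hypothetical sample payload, not real API output.
    sample = '{"data": {"list": [{"id": 101, "title": "Example", "publishtime": "2024-01-02"}]}}'
    for record in parse_records(sample):
        print(record)
```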

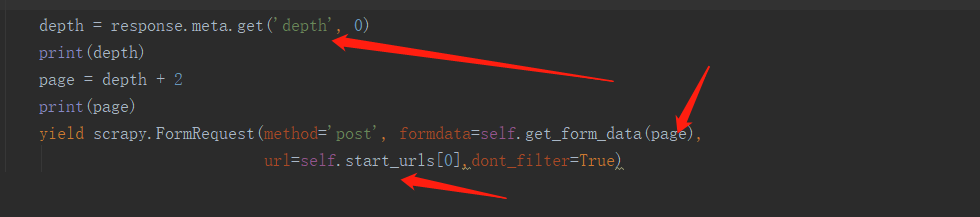

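            # Stop paging once a page comes back empty
            # (assumes the API returns an empty list past the last page).
            if not item_list:
                return

            # Next page. DepthMiddleware stores each response's depth in meta:
            # the page-1 response has depth 0, page 2 has depth 1, so next = depth + 2.
            depth = response.meta.get('depth', 0)
            print(depth)
            page = depth + 2
            print(page)
            yield scrapy.FormRequest(
                url=self.start_urls[0],
                formdata=self.get_form_data(page),
                method='POST',
                dont_filter=True,
            )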

When `formdata` is given, `scrapy.FormRequest` sends a POST by default, so `method='post'` does not need to be specified. Specifying it is not wrong either.
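Plain `urllib.request` follows a similar rule, which helps when debugging the endpoint outside Scrapy: a `Request` switches to POST as soon as a `data` body is supplied. A minimal sketch that builds (but does not send) one page request; the shortened form dict is an assumption for brevity, and `build_request` is a hypothetical name.

```python
from urllib.parse import urlencode
from urllib.request import Request

URL = "http://sc.ndrc.gov.cn//policy/advancedQuery?"


def build_request(page):
    """Build the POST request for one page without sending it."""
    form = {"pageNum": str(page), "pageSize": "10"}  # other fields omitted here
    body = urlencode(form).encode("utf-8")
    req = Request(URL, data=body)  # no method given: data makes it a POST
    req.add_header("Content-Type", "application/x-www-form-urlencoded")
    return req


if __name__ == "__main__":
    print(build_request(1).get_method())  # POST
    # urllib.request.urlopen(build_request(1)) would actually send it.
```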

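`dont_filter=True` tells Scrapy not to drop the request as a duplicate. The scheduler's duplicate filter fingerprints each request (from its method, URL, and body) and discards any request whose fingerprint it has already seen. Different `pageNum` values produce different bodies, so the pages should not collide, but an identical request sent again would be dropped. I recommend keeping the flag on these requests; leaving it out can cause requests to go missing.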
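To make the duplicate filtering concrete, here is a simplified model of a fingerprint-based filter. It is an illustration only, not Scrapy's actual implementation (which also canonicalizes the URL): a request is hashed from method, URL, and body, repeats are rejected, and `dont_filter` skips the check entirely. `fingerprint` and `SimpleDupeFilter` are hypothetical names.

```python
import hashlib


def fingerprint(method, url, body=b""):
    """Hash the parts that identify a request; NUL bytes separate the fields."""
    h = hashlib.sha1()
    h.update(method.upper().encode() + b"\0")
    h.update(url.encode() + b"\0")
    h.update(body)
    return h.hexdigest()


class SimpleDupeFilter:
    def __init__(self):
        self.seen = set()

    def allow(self, method, url, body=b"", dont_filter=False):
        """Return True if the request should be scheduled."""
        if dont_filter:
            return True  # bypass the filter; the fingerprint is not recorded
        fp = fingerprint(method, url, body)
        if fp in self.seen:
            return False  # duplicate: dropped
        self.seen.add(fp)
        return True


if __name__ == "__main__":
    df = SimpleDupeFilter()
    url = "http://sc.ndrc.gov.cn//policy/advancedQuery?"
    print(df.allow("POST", url, b"pageNum=1"))                    # True: first time
    print(df.allow("POST", url, b"pageNum=2"))                    # True: different body
    print(df.allow("POST", url, b"pageNum=1"))                    # False: duplicate
    print(df.allow("POST", url, b"pageNum=1", dont_filter=True))  # True: check skipped
```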

The last block in `parse()` is the pagination code.
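The depth trick works because each follow-up request is one level deeper than the response that produced it: page 1 comes back at depth 0, page 2 at depth 1, and so on, so the next page is `depth + 2`. A request chain like this also needs a stop condition, or it keeps asking for new pages forever (in Scrapy, returning early on an empty list or setting `DEPTH_LIMIT` are two options). Below is a Scrapy-free sketch of the same paging logic; `fetch_page` stands in for the POST request and is hypothetical, and it assumes the API returns an empty list past the last page.

```python
def next_page_from_depth(depth):
    """Page 1 is fetched at depth 0, so the page after a response at `depth` is depth + 2."""
    return depth + 2


def crawl_all(fetch_page, max_pages=1000):
    """Fetch pages 1, 2, 3, ... until a page comes back empty or max_pages is reached."""
    results = []
    depth = 0  # depth of the page-1 response
    page = 1
    while page <= max_pages:
        rows = fetch_page(page)
        if not rows:  # assumed end-of-results signal
            break
        results.extend(rows)
        page = next_page_from_depth(depth)
        depth += 1
    return results


if __name__ == "__main__":
    data = list(range(25))  # fake data source: 25 records, 10 per page

    def fake_fetch(page, size=10):
        start = (page - 1) * size
        return data[start:start + size]

    print(len(crawl_all(fake_fetch)))  # 25
```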

Running the crawls

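    import subprocess
    import sys
    import time

    SPIDERS = ['hunan', 'zhibang']  # spider names registered in the Scrapy project
    INTERVAL = 7200                  # seconds between rounds (2 hours)

    if __name__ == '__main__':
        # Each crawl runs in its own process: Twisted's reactor cannot be restarted,
        # so calling CrawlerProcess.start() repeatedly in one loop would fail.
        while True:
            for name in SPIDERS:
                # Equivalent to "py -m scrapy crawl <name>" from the project directory.
                subprocess.run([sys.executable, '-m', 'scrapy', 'crawl', name])
            time.sleep(INTERVAL)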
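Sleeping 7200 seconds after the crawls means each round starts 2 hours plus the crawl time after the previous one. If rounds should instead start every 2 hours, one option is to sleep only for what is left of the interval. A sketch under that assumption; `run_on_schedule` and its `clock`/`sleep` parameters are hypothetical, injected so the loop can be tried without launching Scrapy.

```python
import time


def run_on_schedule(run_round, interval=7200, rounds=None,
                    clock=time.monotonic, sleep=time.sleep):
    """Call run_round() so that rounds start `interval` seconds apart.

    rounds=None loops forever; an integer stops after that many rounds.
    """
    next_start = clock()
    done = 0
    while True:
        run_round()
        done += 1
        if rounds is not None and done >= rounds:
            return done
        next_start += interval
        delay = next_start - clock()
        if delay > 0:
            sleep(delay)          # wait out the rest of the interval
        else:
            next_start = clock()  # round overran: restart the schedule from now
```

To use it in the run script, wrap the crawl commands in a function and pass it as `run_round`; `run_on_schedule(run_round)` then replaces the `while True` loop.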
